NVIDIA Puts Grace Blackwell on Every Desk and at Every AI Developer’s Fingertips | NVIDIA Newsroom

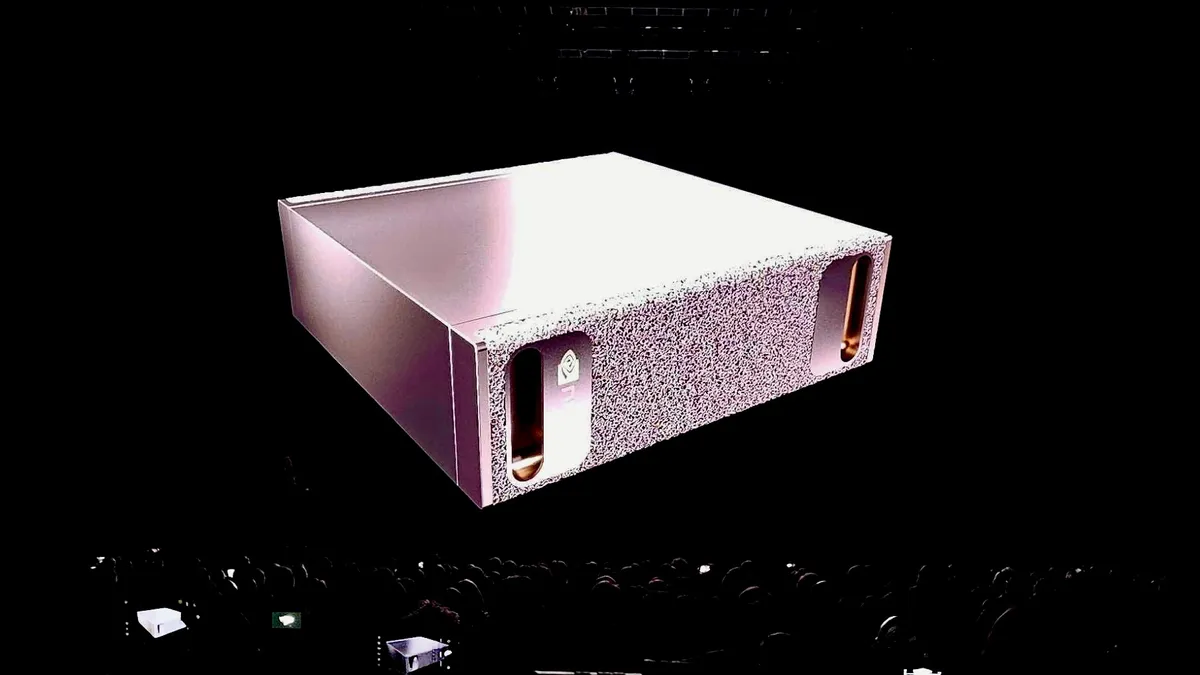

NVIDIA Project DIGITS With New GB10 Superchip Debuts as World’s Smallest AI Supercomputer Capable of Running 200B-Parameter Models

Project DIGITS will be available in May from NVIDIA and top partners, starting at $3,000.

Developers can fine-tune models with the NVIDIA NeMo™ framework, accelerate data science with NVIDIA RAPIDS™ libraries and run common frameworks such as PyTorch, Python and Jupyter notebooks....

NVIDIA NeMo™ is an end-to-end platform for developing custom generative AI—including large language models (LLMs), vision language models (VLMs), video models, and speech AI—anywhere.

Deliver enterprise-ready models with precise data curation, cutting-edge customization, retrieval-augmented generation (RAG), and accelerated performance with NeMo, part of NVIDIA AI Foundry—a platform and service for building custom generative AI models with enterprise data and domain-specific knowledge.

...groundbreaking RTX 50 series GPUs powered by the Blackwell architecture.

...revolutionary advancements in AI, accelerated computing, and industrial digitalization transforming every industry.

DIGITS: Deep learning GPU Intelligence Training System

Size: about that of a Mac Mini (or a mini PC)
