Data Newsletter - O'Reilly Media

"...Notebooks and workflow tools will continue to evolve.

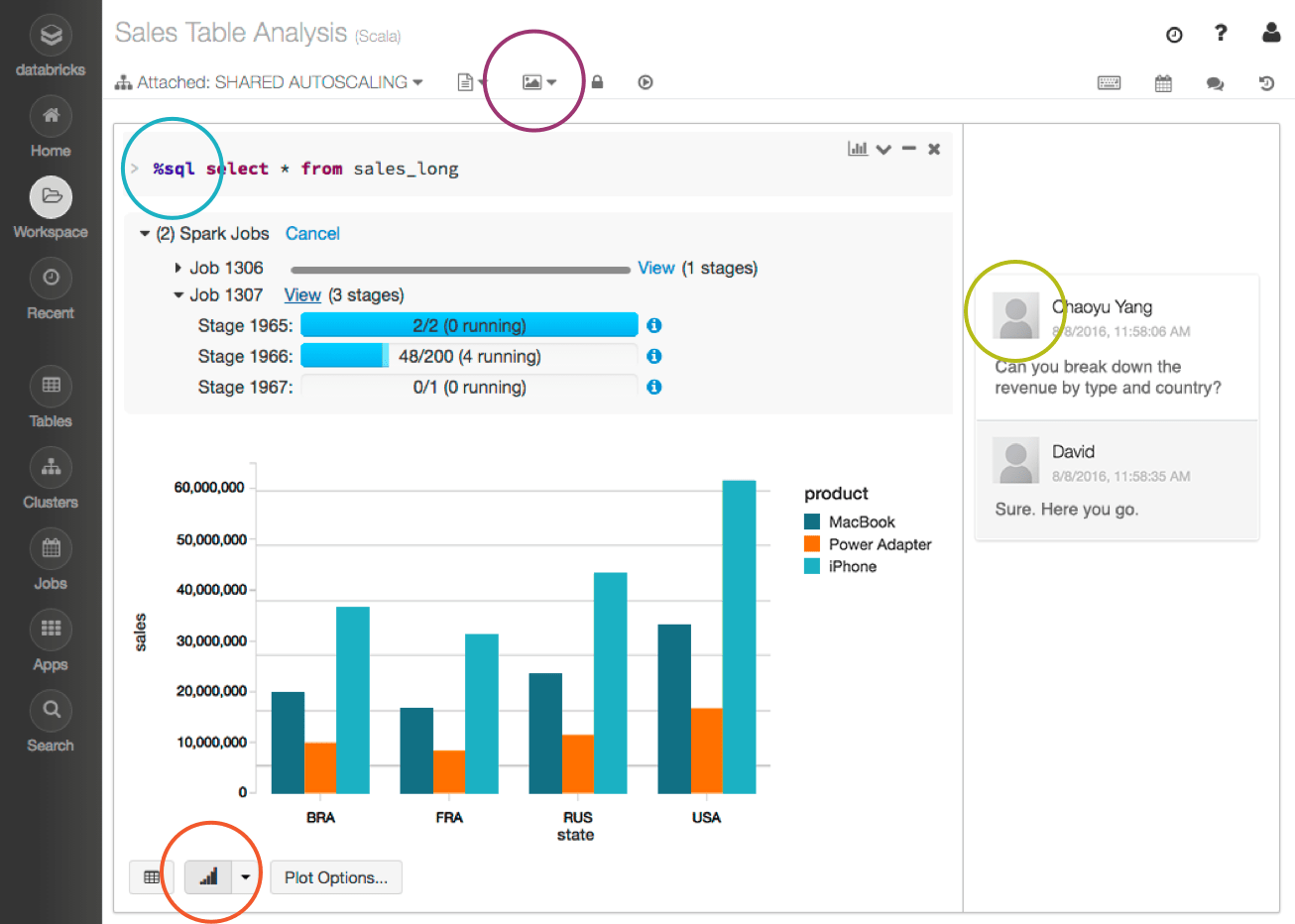

Jupyter Notebook is widely used by data scientists because it offers a rich set of components that can be combined and reused for a broad range of problems, including data cleaning and transformation, numerical simulation, statistical modeling, and machine learning. (O’Reilly uses Jupyter Notebook as the basis for Oriole Interactive Tutorials, for example.) It’s useful for data teams because you can create and share documents that contain live code, equations, visualizations, and explanatory text. And by connecting Jupyter to Spark, you can write Python code for Spark from an easy-to-use interface instead of the Linux command line or the Spark shell.

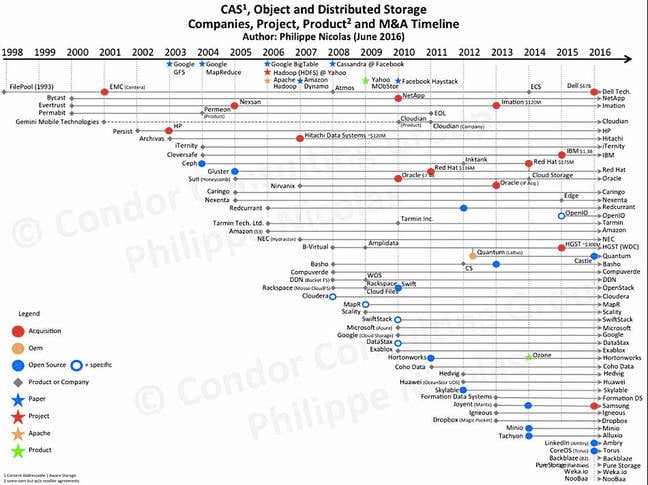

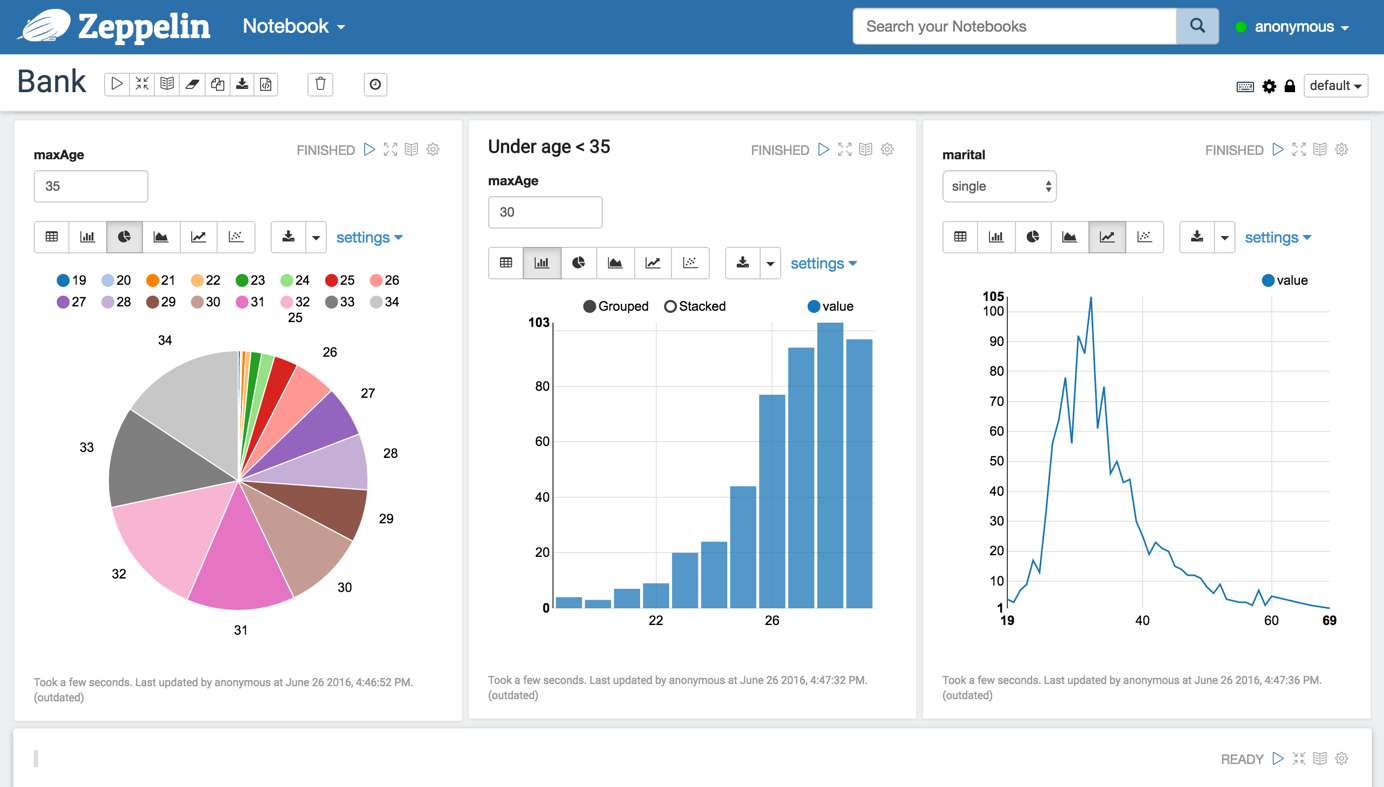

Data professionals continue to use a variety of tools. Beaker notebooks support many programming languages, and there are now multiple notebooks that target the Spark community (Spark Notebook, Apache Zeppelin, and Databricks Cloud). However, not all data professionals are using notebooks: notebooks aren't suited for managing complex data pipelines, for which workflow tools are a better fit. And data engineers favor tools used by software developers. With deep learning and other new techniques entering the data science and big data communities, we anticipate that existing tools will evolve even more."
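A Jupyter notebook file (`.ipynb`) is a JSON document holding an ordered list of cells, which is what makes it easy to share code and explanatory text together. The sketch below builds a minimal notebook by hand using only a small subset of the nbformat version 4 schema; the helper name `make_notebook` and the sample cell contents are hypothetical, chosen for illustration, and real notebooks usually carry extra metadata (kernel and language information) that is omitted here.

```python
import json


def make_notebook(cells):
    """Build a minimal nbformat v4 notebook dict from (kind, source) pairs.

    kind is "markdown" for explanatory text (which may include LaTeX
    equations) or "code" for live code. This is an illustrative subset of
    the schema, not a complete notebook writer.
    """
    nb_cells = []
    for kind, source in cells:
        cell = {"cell_type": kind, "metadata": {}, "source": source}
        if kind == "code":
            # Code cells also record execution state and the outputs
            # (text, tables, rendered plots) produced when they run.
            cell["execution_count"] = None
            cell["outputs"] = []
        nb_cells.append(cell)
    return {
        "cells": nb_cells,
        "metadata": {},
        "nbformat": 4,
        "nbformat_minor": 4,  # minor version 4 predates mandatory cell ids
    }


nb = make_notebook([
    ("markdown", "# Cleaning step\nWe rescale values using $z = (x - \\mu) / \\sigma$."),
    ("code", "values = [1.0, 2.0, 3.0]\nmean = sum(values) / len(values)\nmean"),
])

# Serialize to the JSON text that would be saved in an .ipynb file.
text = json.dumps(nb, indent=1)
```

Saving `text` to a file with an `.ipynb` extension should give a document that Jupyter can open, with the Markdown cell rendered (equation included) above an unexecuted code cell. In practice the `nbformat` package handles this, including validation against the full schema.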

"Notebook-style development offers a more exploratory way to write code than traditional IDEs do. Notebook interfaces consist of a series of code blocks, called cells, which can stand alone or act in unison. Development becomes a process of discovery: a developer experiments in one cell, then writes code in a subsequent cell based on the results of the first. Particularly when analyzing large datasets, this conversational approach allows researchers to quickly discover patterns or other artifacts in the data."
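Cells "act in unison" because they share state: names defined in one cell remain available to every cell run after it. The toy model below captures that idea by executing each cell's source in a single shared namespace. It is a simplified sketch, not how Jupyter is actually implemented, and the function name `run_cells` and the sample readings are hypothetical, made up for illustration.

```python
def run_cells(cells):
    """Execute each cell's source in one shared namespace, like a notebook kernel.

    Returns the namespace so callers can inspect every name the cells defined.
    Toy model only: exec() runs arbitrary code, so never use it on untrusted input.
    """
    namespace = {}
    for source in cells:
        # Each cell sees names created by earlier cells, which is what lets
        # a later cell build on results discovered in an earlier one.
        exec(source, namespace)
    return namespace


cells = [
    # Cell 1: load a small, made-up dataset.
    "readings = [12, 15, 11, 48, 14, 13]",
    # Cell 2: after inspecting cell 1, compute a summary statistic.
    "mean = sum(readings) / len(readings)",
    # Cell 3: the mean hints at an outlier, so flag values far above it.
    "outliers = [r for r in readings if r > 2 * mean]",
]

ns = run_cells(cells)
print(ns["mean"], ns["outliers"])
```

Running cell 3 on its own fails with a `NameError`, because `readings` and `mean` only exist once the earlier cells have run. This ordering dependency is also why notebooks suit exploration better than complex pipelines.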