LangChain vs LlamaIndex: Choose the Best Framework for Your AI Applications

LlamaIndex is a powerful tool for data indexing and retrieval, designed to enhance information accessibility. It streamlines the process of efficiently indexing data, making it easier to locate and retrieve relevant information.

LangChain, on the other hand, is a versatile framework designed to empower developers to create a wide range of language model-powered applications.

The modular architecture of LangChain enables developers to efficiently design customized solutions for various use cases.

LangChain provides interfaces for prompt management, interaction with language models, and chain management. It also includes memory management so an application can remember previous interactions. LangChain excels at chatbot applications, text generation, question answering, and language translation.
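To make the pieces named above more concrete (prompt management, model interaction, chains, and memory), here is a minimal plain-Python sketch. It does not use the LangChain API: `PromptTemplate`, `ConversationMemory`, `Chain`, and `fake_model` are hypothetical stand-ins written for this illustration, and the "model" is a placeholder function rather than a real LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class PromptTemplate:
    """Hypothetical stand-in for a prompt-management component."""
    template: str

    def format(self, **values: str) -> str:
        return self.template.format(**values)


@dataclass
class ConversationMemory:
    """Keeps earlier turns so later prompts can include them."""
    turns: List[str] = field(default_factory=list)

    def add(self, user: str, reply: str) -> None:
        self.turns.append(f"User: {user}\nAssistant: {reply}")

    def as_text(self) -> str:
        return "\n".join(self.turns)


@dataclass
class Chain:
    """Wires prompt -> model -> memory into a single call."""
    prompt: PromptTemplate
    model: Callable[[str], str]
    memory: ConversationMemory = field(default_factory=ConversationMemory)

    def run(self, question: str) -> str:
        text = self.prompt.format(history=self.memory.as_text(), question=question)
        reply = self.model(text)
        self.memory.add(question, reply)  # remember this turn for the next call
        return reply


def fake_model(prompt: str) -> str:
    """Placeholder for a real LLM call: reports how many earlier turns it saw."""
    return f"seen {prompt.count('User:')} earlier turn(s)"


chain = Chain(
    prompt=PromptTemplate("{history}\nQuestion: {question}\nAnswer:"),
    model=fake_model,
)
first = chain.run("What is LangChain?")
second = chain.run("And LlamaIndex?")
print(first)
print(second)
```

The second call sees the first exchange in its prompt, which is the role the memory component plays in a chatbot-style application.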

AI summary (Google)

- LlamaIndex is best for:

- Data access: LlamaIndex is a good choice for applications that need to access data efficiently and accurately, such as internal search systems, knowledge management, and enterprise solutions.

- Data integration: LlamaIndex is a bridge between data and language models, and is good for integrating specific, structured data.

- Workflows: LlamaIndex workflows are event-driven, which makes it easier to follow the flow of actions and interactions.
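As a rough illustration of what "event-driven" means for a workflow, the sketch below routes events to steps by name and records the order in which they fire. It is a plain-Python approximation, not the LlamaIndex workflow API: `Event`, `Workflow`, `retrieve`, and `synthesize` are hypothetical names, and both steps are placeholders for real retrieval and model calls.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, Dict, List, Optional


@dataclass
class Event:
    name: str
    payload: str


# A step receives one event and may return the next event (or None to stop).
Step = Callable[[Event], Optional[Event]]


class Workflow:
    """Hypothetical event loop: each step is registered for one event name."""

    def __init__(self) -> None:
        self.steps: Dict[str, Step] = {}
        self.trace: List[str] = []

    def on(self, event_name: str, step: Step) -> None:
        self.steps[event_name] = step

    def run(self, start: Event) -> str:
        queue: Deque[Event] = deque([start])
        result = ""
        while queue:
            event = queue.popleft()
            self.trace.append(event.name)  # record the flow for inspection
            step = self.steps.get(event.name)
            if step is None:
                result = event.payload  # no handler: treat as the final result
                continue
            next_event = step(event)
            if next_event is not None:
                queue.append(next_event)
        return result


def retrieve(event: Event) -> Event:
    # Placeholder retrieval: a real step would query an index here.
    return Event("retrieved", f"context for '{event.payload}'")


def synthesize(event: Event) -> Event:
    # Placeholder synthesis: a real step would call a language model here.
    return Event("stop", f"answer based on {event.payload}")


wf = Workflow()
wf.on("start", retrieve)
wf.on("retrieved", synthesize)
answer = wf.run(Event("start", "vacation policy"))
print(wf.trace)
print(answer)
```

Because every hand-off is an explicit event, the trace shows exactly which step ran and in what order, which is the traceability benefit the summary describes.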

- LangChain is best for:

- Complex interaction: LangChain is good for applications that require complex interaction and content generation, such as customer support, code documentation, and NLP tasks.

- Model interaction: LangChain's model interaction module lets it interact with any language model, managing inputs and extracting information from outputs.
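The "model interaction" idea (one interface for any model, with input management and output extraction around it) can be sketched in plain Python as follows. This is not LangChain code: the `LanguageModel` protocol, `EchoJsonModel`, `build_prompt`, `parse_output`, and `classify` are hypothetical names, and the model returns a fixed string instead of calling an API.

```python
import json
from typing import Dict, Protocol


class LanguageModel(Protocol):
    """Any object with a complete() method can be plugged in."""

    def complete(self, prompt: str) -> str: ...


class EchoJsonModel:
    """Placeholder model: returns a fixed JSON string instead of calling an API."""

    def complete(self, prompt: str) -> str:
        return '{"sentiment": "positive", "confidence": 0.9}'


def build_prompt(review: str) -> str:
    # Input management: turn raw user data into a model-ready prompt.
    return (
        "Classify the sentiment of this review as JSON "
        f"with keys sentiment and confidence:\n{review}"
    )


def parse_output(raw: str) -> Dict[str, object]:
    # Output extraction: validate and structure the model's raw text.
    data = json.loads(raw)
    if "sentiment" not in data:
        raise ValueError("model output is missing 'sentiment'")
    return data


def classify(model: LanguageModel, review: str) -> Dict[str, object]:
    return parse_output(model.complete(build_prompt(review)))


result = classify(EchoJsonModel(), "Great support team!")
print(result["sentiment"])
```

Swapping in a different model only requires another class with the same `complete` method; the prompt building and parsing stay unchanged.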

chatbot - Differences between Langchain & LlamaIndex - Stack Overflow

Docs

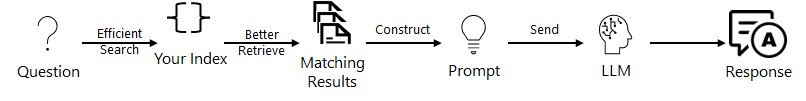

Building an LLM Application - LlamaIndex

By default, LlamaIndex uses a simple in-memory vector store that's great for quick experimentation. Indexes can be persisted to (and loaded from) disk (or a vector database).

ts.llamaindex.ai

LlamaIndex.TS is the JS/TS version of LlamaIndex, the framework for building agentic generative AI applications connected to your data.
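The docs excerpt above mentions a default in-memory vector store that can be persisted to and loaded from disk. The following Python sketch illustrates that idea only. It does not use the LlamaIndex API: `InMemoryVectorStore`, `embed`, and `cosine` are hypothetical, the "embedding" is a toy letter-count vector rather than a real embedding model, and the JSON file name is an assumption.

```python
import json
import math
import os
import tempfile
from typing import Dict, List


def embed(text: str) -> List[float]:
    """Toy embedding (letter counts). A real setup would call an embedding model."""
    counts = [0.0] * 26
    for ch in text.lower():
        if "a" <= ch <= "z":
            counts[ord(ch) - ord("a")] += 1.0
    return counts


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical store: maps each document text to its vector."""

    def __init__(self) -> None:
        self.vectors: Dict[str, List[float]] = {}

    def add(self, text: str) -> None:
        self.vectors[text] = embed(text)

    def query(self, question: str, top_k: int = 1) -> List[str]:
        q = embed(question)
        ranked = sorted(
            self.vectors, key=lambda t: cosine(q, self.vectors[t]), reverse=True
        )
        return ranked[:top_k]

    def persist(self, path: str) -> None:
        with open(path, "w", encoding="utf-8") as f:
            json.dump(self.vectors, f)

    @classmethod
    def load(cls, path: str) -> "InMemoryVectorStore":
        store = cls()
        with open(path, encoding="utf-8") as f:
            store.vectors = json.load(f)
        return store


store = InMemoryVectorStore()
store.add("Vacation policy: 20 days per year.")
store.add("Expense reports are due monthly.")

# Persist to a temporary directory, then reload into a fresh store.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "index.json")
    store.persist(path)
    restored = InMemoryVectorStore.load(path)

print(restored.query("vacation days"))
```

Keeping everything in memory makes experimentation fast, while the persist and load steps avoid re-embedding the documents on every run.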

run-llama/llama_index: LlamaIndex is a data framework for your LLM applications @GitHub

Courses:

Course: Build RAG Applications with LlamaIndex and JavaScript [NEW] | Udemy Business

Course: LangChain & LLMs - Build Autonomous AI Tools Masterclass | Udemy Business

JS/TS