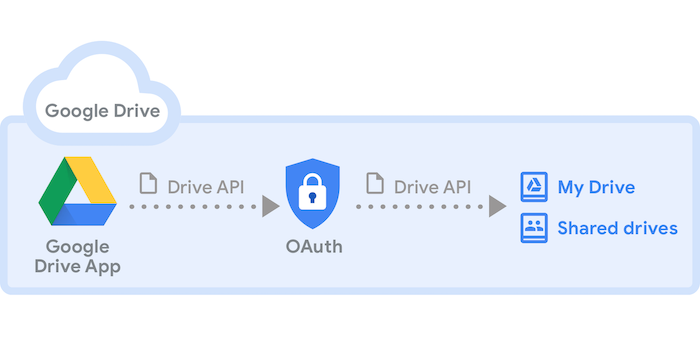

You can either self-host or use our public instance. Everything is open-source, including the full production setup — there’s no ‘open-core’ model here. Check out our GitHub. The map data comes from OpenStreetMap.

Using our public instance is completely free: there are no limits on the number of map views or requests. There’s no registration, no user database, no API keys, and no cookies. We aim to cover the running costs of our public instance through donations.

We also provide weekly full planet downloads in both Btrfs and MBTiles formats.
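
If you go the MBTiles route, the download is an SQLite database, so you can inspect it with standard tools. The following is a minimal sketch, not part of our tooling: it assumes the file follows the MBTiles specification (a `metadata` table of name/value pairs and a `tiles` table or view with `zoom_level`, `tile_column`, `tile_row`, and `tile_data`). The function names and the file name `planet.mbtiles` are made up for illustration.

```python
import sqlite3


def open_mbtiles(path: str) -> sqlite3.Connection:
    """Open an MBTiles file read-only (path is whatever you downloaded)."""
    return sqlite3.connect(f"file:{path}?mode=ro", uri=True)


def xyz_to_tms_row(zoom: int, y: int) -> int:
    """Convert an XYZ (slippy map) row to the TMS row used by MBTiles.

    The MBTiles spec stores rows in TMS order, where row 0 is at the
    bottom of the map, so the y coordinate has to be flipped.
    """
    return (1 << zoom) - 1 - y


def read_metadata(conn: sqlite3.Connection) -> dict:
    """Return the name/value pairs from the metadata table."""
    return dict(conn.execute("SELECT name, value FROM metadata"))


def read_tile(conn: sqlite3.Connection, zoom: int, x: int, y: int):
    """Return the raw tile bytes for an XYZ tile, or None if it is absent."""
    row = conn.execute(
        "SELECT tile_data FROM tiles "
        "WHERE zoom_level = ? AND tile_column = ? AND tile_row = ?",
        (zoom, x, xyz_to_tms_row(zoom, y)),
    ).fetchone()
    return None if row is None else row[0]
```

For example, `read_tile(open_mbtiles("planet.mbtiles"), 0, 0, 0)` would return the bytes of the single zoom-0 tile, assuming the file sits in the current directory under that name. Vector tiles in MBTiles files are usually gzip-compressed, so you may need `gzip.decompress` before decoding them.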