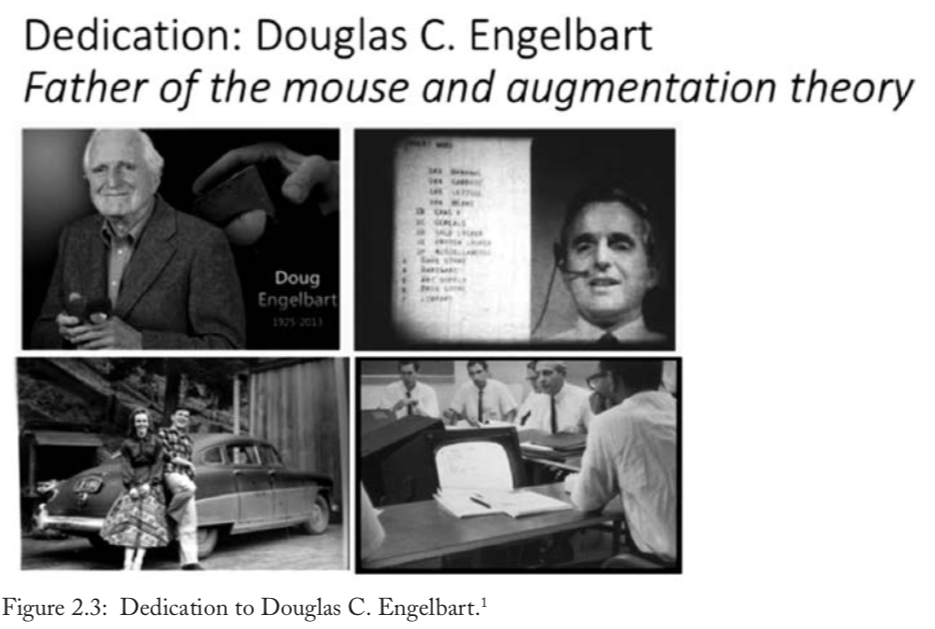

Douglas C. Engelbart: Mother-of-all-demos 50th Anniversary Celebration – Service Science

On December 9, 1968, at the Fall Joint Computer Conference in San Francisco, a researcher from SRI (Stanford Research Institute) unveiled what he and his team had created: a vision of the future in which advanced computing and communications technologies augment human performance. Douglas C. Engelbart and his team delivered what is famously known today as “the mother of all demos.” The text I just typed, the hyperlinks I just used, the mouse I used for pointing and clicking, and the Zoom online meeting I joined last week all owe an intellectual debt to Doug and his team.

1968 “Mother of All Demos” by SRI’s Doug Engelbart and Team (YouTube, 5-minute highlights)

The Mother of All Demos, presented by Douglas Engelbart (1968) (YouTube, full 1.5 hours)

In the 1960s, Douglas Engelbart led a team at the Stanford Research Institute that developed key computer innovations, including the computer mouse, invented in 1964. He also pioneered concepts such as hypertext, word processing, and collaborative editing, all of which were demonstrated in the groundbreaking 1968 “Mother of All Demos” presentation.

“IA” (Intelligence Augmentation) is a term popularized by Douglas Engelbart.