Patterns of intelligence solutions on the cloud:

- Intelligent Bots (conversations)

- Machine + Human Intelligence

- Deep Intelligence

- Intelligent Lake

- Intelligence DB

Axes of innovation at Microsoft: Data * Cloud * Intelligence

Joseph Sirosh | LinkedIn

Microsoft Machine Learning & Data Science Summit 2016 | Channel 9

Machine Learning @ 1 million predictions per second and more | Business Excellence

"Combination of in-memory technologies and in-database analytics with R at scale using SQL Server 2016 can make 1 million fraud predictions per second."

Keynote Session: Dr. Edward Tufte - The Future of Data Analysis | Microsoft Machine Learning & Data Science Summit 2016 | Channel 9

Microsoft Data Science Summit: Talking about Bots | Microsoft Machine Learning & Data Science Summit 2016 | Channel 9

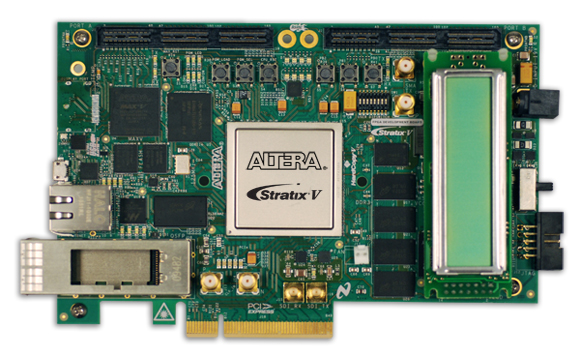

Programmable chips turning Azure into a supercomputing powerhouse | Ars Technica

"Microsoft is adding something new: field programmable gate arrays (FPGAs), highly configurable processors that can be rewired using software in order to provide hardware accelerated implementations of software algorithms.... The result was a 40-fold speed-up compared to a software implementation running on a regular CPU."

"...In addition to their PCIe connection to each hardware server, the FPGA boards were also plumbed directly into the Azure network, enabling them to send and receive network traffic without needing to pass it through the host system's network interface.

That PCIe interface is shared with the virtual machines directly, giving the virtual machines the power to send and receive network traffic without having to bounce it through the host first. The result? Azure virtual machines can push 25 gigabits per second of network traffic, with a latency of 25-50 microseconds. That's a tenfold latency improvement and can be done without demanding any host CPU resources."
